I am a founding AI Research Scientist at Project Prometheus, building AI to fundamentally change how we design and engineer the physical world. My work spans generative modeling, model scaling, and building large-scale open scientific benchmarks, with the goal of making computational science faster and more accessible.

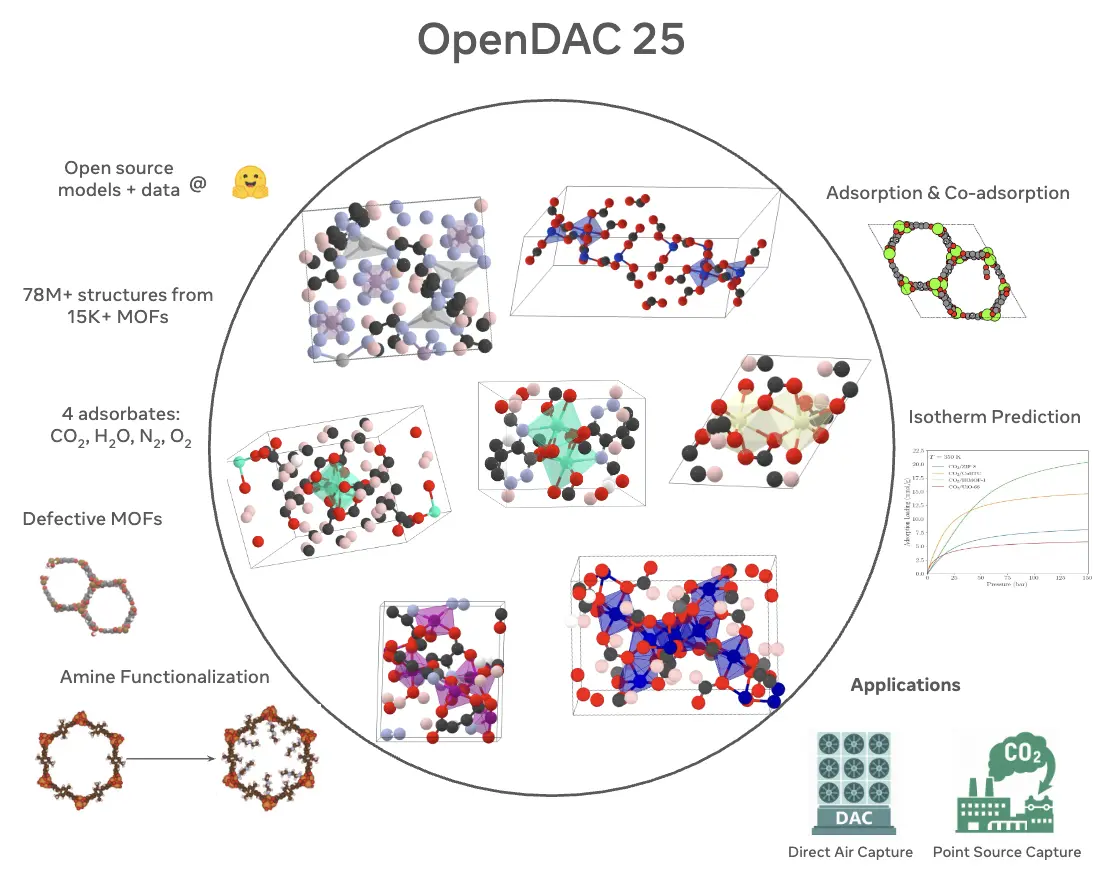

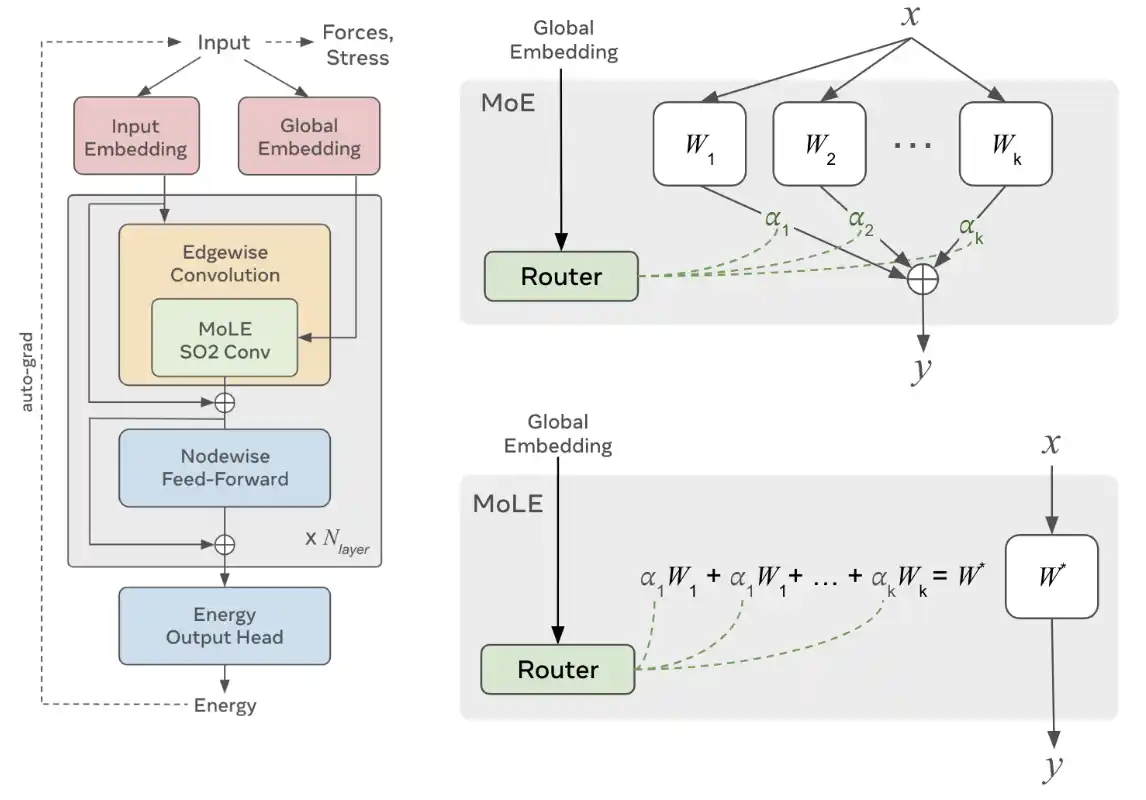

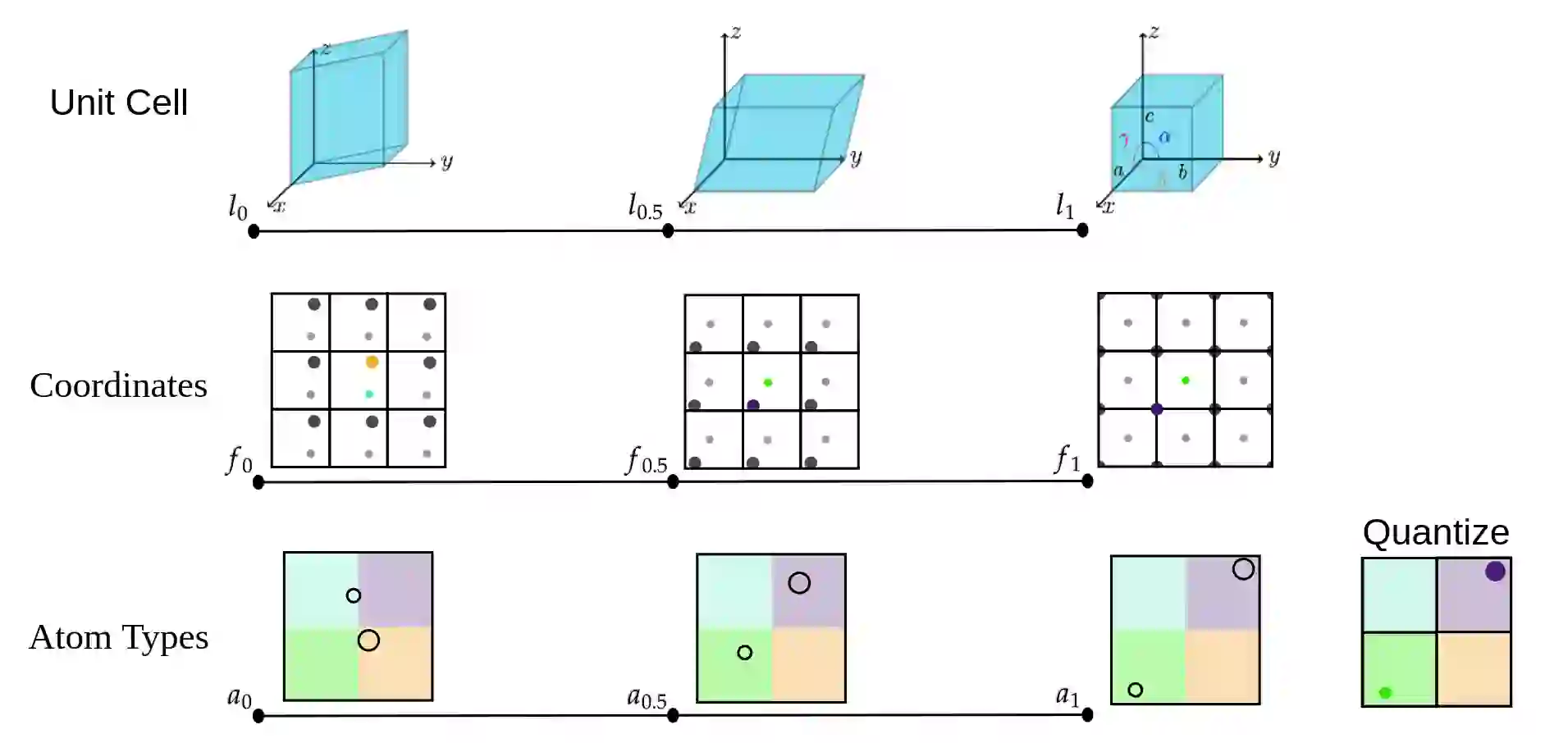

Most recently at Meta FAIR, I led efforts on AI for Science, including universal machine-learning interatomic potentials (UMA), generative models for crystal structure design (FlowLLM, FlowMM), and some of the largest open datasets at the intersection of AI and materials science. Together, these efforts accelerate materials discovery by replacing expensive quantum-mechanical simulations with fast, accurate AI models.

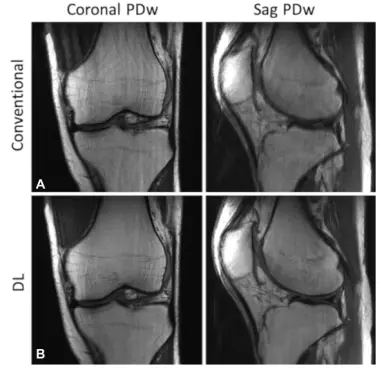

I also led fastMRI, a collaboration between Meta AI and NYU Langone to accelerate MRI scanning using AI. Our methods achieve up to 4x acceleration with no loss in diagnostic accuracy and have become the clinical standard for accelerated MRI worldwide.

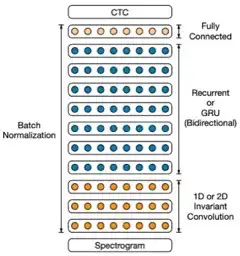

Earlier, I built and led the speech research team at FAIR, where we trained some of the earliest multi-billion parameter speech models and developed self-supervised methods that served billions of users across Meta's products. Before Meta, I was part of the team at Baidu that built Deep Speech 2, one of the first end-to-end neural speech recognition systems.

I hold a Master's in Language Technologies from Carnegie Mellon University, and my research has been widely featured in the popular press.

Selected Publications

- FlowMM: Generating Materials with Riemannian Diffusion/Flow Matching. International Conference on Machine Learning (ICML) 2024

- Deep Learning Reconstruction Enables Prospectively Accelerated Clinical Knee MRI. Radiology

-

-

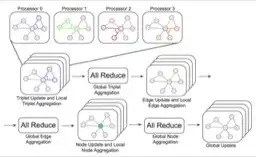

Towards Training Billion Parameter Graph Neural Networks for Atomic SimulationsInternational Conference on Learning Representations (ICLR) 2022

-

-

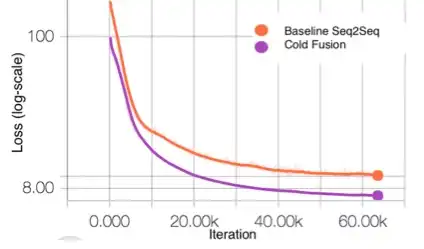

Cold Fusion: Training Seq2Seq Models Together with Language ModelsInterspeech 2018

- Deep Speech 2: End-to-End Speech Recognition in English and Mandarin. International Conference on Machine Learning (ICML) 2016